    T13:56:11.836Z ERROR awss3/collector.go:99 SQS ReceiveMessageRequest failed: EC2RoleRequestError: no EC2 instance role found
    caused by: EC2MetadataError: failed to make Client request
    T13:56:11.835Z INFO registrar/registrar.go:137 Registrar stopped
    T13:56:11.834Z INFO registrar/registrar.go:166 Ending Registrar
    T13:56:11.834Z INFO registrar/registrar.go:132 Stopping Registrar
    T13:56:11.834Z INFO beater/crawler.go:178 Crawler stopped
    T13:56:11.834Z INFO cfgfile/reload.go:227 Dynamic config reloader stopped
    T13:56:11.834Z INFO beater/crawler.go:158 Stopping 1 inputs
    T13:56:11.834Z INFO beater/crawler.go:148 Stopping Crawler
    T13:56:11.834Z INFO beater/filebeat.go:515 Stopping filebeat

The configuration fragments involved:

    # S3 bucket arn
    var.bucket_arn: arn:aws:s3:::mybucket
    # Bucket list interval on S3 bucket
    var.bucket_list_interval: 300s
    # Number of workers on S3 bucket

    shared_credential_file: /etc/filebeat/fdr_credentials

In order to try Filebeat in production, I launched 1.1k instances of Filebeat on my production boxes, each monitoring a couple of files on its box and sending data to 3 central Logstash servers, which push that data, after some modifications and drops, to an Elasticsearch cluster.

(Issue opened 19 Dec 2019, 07:54PM UTC, by nathankodilla.) With Filebeat 7.5.

Probably the SQS message is in a different format than what we support in the Filebeat S3 input. Hi, thanks for posting here. This looks like a bug to me.
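To test the suspicion above that the SQS message is in an unexpected format, one option is to pull a message body from the queue by hand and check whether it has the shape of a standard S3 event notification, which carries a `Records` list whose entries hold `s3.bucket.name` and `s3.object.key`. The sketch below is a minimal stdlib-only check. The function names are hypothetical, and it does not reproduce Filebeat's own parsing logic.

```python
import json


def _dig(value, *path):
    """Follow a chain of dict keys, returning None as soon as one step is missing."""
    for key in path:
        if not isinstance(value, dict):
            return None
        value = value.get(key)
    return value


def s3_objects_from_sqs_body(body):
    """Return (bucket, key) pairs if `body` looks like an S3 event notification.

    An empty list means the body is not JSON or does not follow the
    Records[].s3.bucket.name / Records[].s3.object.key layout.
    """
    try:
        message = json.loads(body)
    except (TypeError, ValueError):
        return []
    records = _dig(message, "Records")
    if not isinstance(records, list):
        return []
    found = []
    for record in records:
        bucket = _dig(record, "s3", "bucket", "name")
        key = _dig(record, "s3", "object", "key")
        if bucket and key:
            found.append((bucket, key))
    return found
```

An empty result for a message taken from the queue would suggest that the producer publishes its own layout rather than an S3 event notification, which would be consistent with the format mismatch suspected above.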

But I'm running into issues just getting one filebeat instance to successfully fetch the data.
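One possible cause of an `EC2RoleRequestError` like the one logged, offered here as an assumption rather than a confirmed diagnosis, is that no usable static credentials were found, so the AWS SDK fell back to the EC2 instance role. An AWS shared credentials file is INI-formatted, with a profile section containing `aws_access_key_id` and `aws_secret_access_key`. The sketch below checks a file's text for those keys. The function name and the `default` profile are illustrative choices.

```python
import configparser

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def missing_credential_keys(text, profile="default"):
    """Return the required keys absent (or empty) in `profile`.

    `text` is the content of an INI-style AWS shared credentials file.
    A missing profile is reported as all keys missing.
    """
    # Interpolation is disabled so that '%' inside a secret is read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    return [
        key
        for key in REQUIRED_KEYS
        if not parser.get(profile, key, fallback="").strip()
    ]


sample = """
[default]
aws_access_key_id = AKIAEXAMPLE
"""
print(missing_credential_keys(sample))  # ['aws_secret_access_key']
```

To check a real file, read its contents and pass them in, together with the profile name that the Filebeat configuration refers to.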

#Filebeats s3 plus

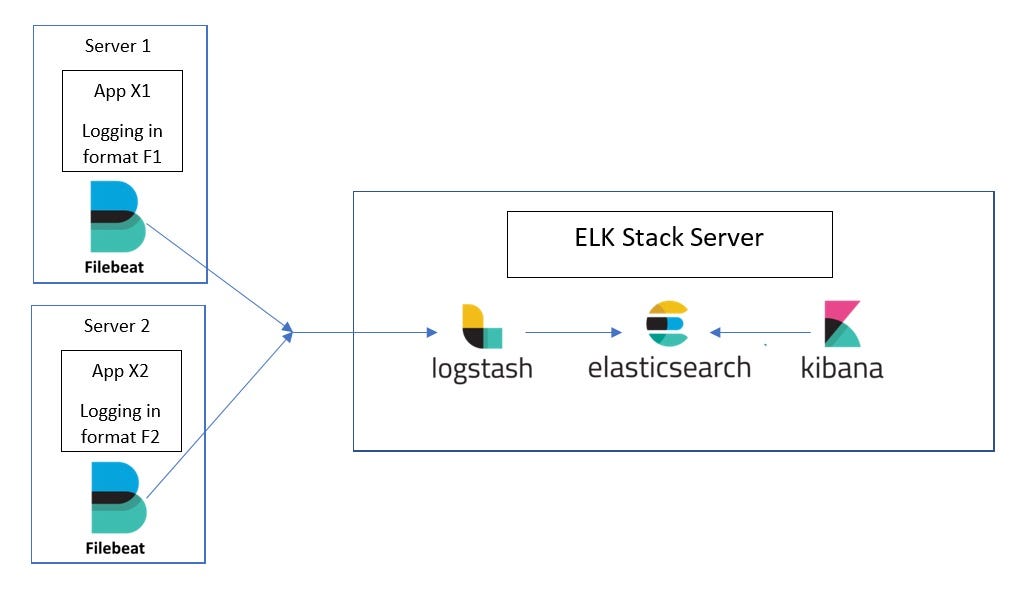

It would certainly be easier to use Filebeat when just getting started. Another issue is that you must run Logstash on each application instance plus the ones you need for ingestion into Elasticsearch.

I'm interested in using Filebeat to fetch CrowdStrike Falcon Data Replicator (FDR) logs with the aws-s3 plugin, and to use its parallel processing functionality due to the sheer volume of data FDR produces.

#Filebeats s3 code

Filebeat is often the easiest way to get logs from your system to Logz.io. Logz.io has a dedicated configuration wizard to make it simple to configure Filebeat. If you already have Filebeat and you want to add new sources, check out our other shipping instructions to copy and paste just the relevant changes from our code examples.

The json-file format supported by Filebeat would work out of the box here.

#Filebeats s3 windows

Describe a specific use case for the enhancement or feature: reading XML-based Windows event logs from S3 that are newline delimited, where the XML itself contains strings with newlines. When reading log files from S3, users should be able to specify the same multiline options that are available with the log input.

One big downside: you cannot use `docker logs` for quick inspection anymore, because AWS doesn't offer dual logger output. Major downside: there is a race condition with this approach where you will lose the initial logs from containers that start before the Logstash container. And then push the logs to S3 via the Logstash output.

Then you should see the filebeat binary under the same directory, beats/x-pack/filebeat. Running `./filebeat modules enable aws` should enable the aws module, and then you can use the elb fileset there.
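For the multiline use case described above (XML events whose strings contain newlines), the core idea behind options such as `multiline.pattern`, `multiline.negate`, and `multiline.match` on the log input is to treat a line matching a start pattern as the beginning of a new event and to append every other line to the previous one. The sketch below illustrates that grouping in plain Python. The function name and the `^<Event` start pattern are assumptions for illustration, not Filebeat code.

```python
import re


def group_multiline(lines, start_pattern=r"^<Event[ >]"):
    """Join physical lines into logical events.

    A line matching `start_pattern` opens a new event; any other line is
    treated as a continuation of the event currently being collected.
    """
    start = re.compile(start_pattern)
    events, current = [], []
    for line in lines:
        if start.search(line) and current:
            # A new event begins, so flush the one collected so far.
            events.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events


raw = [
    "<Event><Data>first line",
    "second line</Data></Event>",
    "<Event><Data>single</Data></Event>",
]
for event in group_multiline(raw):
    print(repr(event))
```

With the three physical lines in `raw`, the first two are joined into one event and the third stands alone, which is the behaviour the feature request asks for when reading such files from S3.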

#Filebeats s3 update

It would be very helpful to allow Filebeat to output to S3 directly. Currently, if one wants to store logs on S3, Logstash is required. When using a service like AWS Elastic Beanstalk, it is very handy to push logs to S3 for persistence. I can imagine 100 other use cases, but this is the current one that would have simplified my life.

The only alternative is rather complicated: you have to configure the AWS ECS agent to support GELF logging and then use GELF logging to Logstash.

What I do is go to beats/x-pack/filebeat and run `mage update` and `mage build`.
